Meet Bob, the Web Analyst.

He lives in the GCP kingdom and is always busy. It is his duty to extract value from GA4 data. To do so, he uses BigQuery, Dataform, Cloud Functions, Pub/Sub, Cloud Logging, and all the other superpowers to bake the data.

But he has to fight his worst enemy, Clicks.

These dirty little monsters are everywhere. Whenever he sets up data cleaning, transformation, scheduling, monitoring, or predictions, he meets hundreds of Clicks. And they are real troublemakers:

- Forget a few Clicks (granting a permission or enabling an API) and the pipeline won’t work

- All clients are different, and you need to remember which Clicks are right for each client

- They have no memory: sometimes even Bob can’t explain why something was changed, or why it had to be done in exactly this way.

- Clicks can take control of Bob’s junior team members and force them to click every unclickable button and place

- They require step-by-step documentation, which is almost always outdated

- And, of course, Clicks eat people’s brains and energy, leaving Bob no time to create something cool (like stories).

That’s why Bob decided to save the world and fight Clicks.

Google Cloud CLI

The first rescuer was the console (the command line, not the Cloud Console). God save the console. Bob installed the Google Cloud CLI, started writing gcloud commands, and learned these simple tricks:

- Use gcloud config configurations commands to switch between projects.

- Define all magic constants as environment variables, so the same commands can be reused for different clients and tasks.

- Get a GCP resource’s state and properties using describe or info commands, like this:

```shell
gcloud info --format='value(config.project)'
```

Or, even more beautiful:

```shell
gcloud info --format=table"[box=true](config.project:label=PROJECT_ID)"
```

Or define environment variables:

```shell
# Store the active project ID for reuse in later commands
export project_id=$(gcloud info --format='value(config.project)')
echo "${project_id}"
```

The console is great: it dramatically reduces Clicks, but it also has some cons:

- There is still a lot of manual work, which leads to mistakes

- You need documentation for each client

- There’s no fast way to check the current state and find what’s missing

- You have to have been born in the 1980s or earlier to consider it a user-friendly interface.

Terraform

Bob moved on and met Terraform. It made his life much better: he could describe the configuration, enable APIs, deploy Cloud Functions, and more with a single gentle button press (practically not even a click).

For example, Terraform can enable Dataform with a single resource.


In this resource, Bob provides a link to a GitHub repository and a secret containing a GitHub token.
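The original snippet did not survive, so here is a minimal sketch of what such a configuration might look like. It assumes three things. First, the google-beta provider, which exposes google_dataform_repository. Second, a GitHub token already stored in Secret Manager. Third, the hypothetical variable names github_repo_url and github_token_secret_version. The region, repository name, and default branch are placeholders too.

```hcl
# Sketch only: variable names, region, and repository name are hypothetical.
variable "project_id" {
  type = string
}

variable "region" {
  type    = string
  default = "europe-west1" # hypothetical default
}

variable "github_repo_url" {
  type        = string
  description = "HTTPS URL of the client's Dataform repository on GitHub"
}

variable "github_token_secret_version" {
  type        = string
  description = "Secret Manager version holding the GitHub token: projects/*/secrets/*/versions/*"
}

# Enabling the API is one of the Clicks that is easy to forget.
resource "google_project_service" "dataform" {
  project            = var.project_id
  service            = "dataform.googleapis.com"
  disable_on_destroy = false
}

# Dataform repository linked to the GitHub remote via the token secret.
resource "google_dataform_repository" "ga4" {
  provider = google-beta
  project  = var.project_id
  region   = var.region
  name     = "ga4-transformations" # hypothetical name

  git_remote_settings {
    url                                 = var.github_repo_url
    default_branch                      = "main"
    authentication_token_secret_version = var.github_token_secret_version
  }

  depends_on = [google_project_service.dataform]
}
```

Dataform's service agent also needs the roles/secretmanager.secretAccessor role on that secret. That permission is another Click worth keeping in code rather than in someone's memory.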

Bob also split the configuration into modules and defined a variable with the list of modules each client needs.


Based on this variable, Terraform enables only the needed modules.


The trick is the count argument: if count is 0, Terraform does not create the module. So if a particular client needs Dataform, Bob adds it to modules_list, and Terraform sets up all the needed resources.
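Here is a minimal sketch of the modules_list pattern. It assumes local modules under ./modules/ with the hypothetical names dataform and alerting, each of which declares a project_id variable.

```hcl
variable "project_id" {
  type = string
}

# Optional parts of the pipeline this client needs, e.g. ["dataform"].
variable "modules_list" {
  type    = list(string)
  default = []
}

# count = 1 creates the module; count = 0 skips it entirely.
module "dataform" {
  source = "./modules/dataform" # hypothetical local module
  count  = contains(var.modules_list, "dataform") ? 1 : 0

  project_id = var.project_id
}

module "alerting" {
  source = "./modules/alerting" # hypothetical local module
  count  = contains(var.modules_list, "alerting") ? 1 : 0

  project_id = var.project_id
}
```

Because count turns each module into a list, other resources refer to its outputs by index, for example module.dataform[0].some_output (the output name is hypothetical).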

The Terraform Registry has many helpful modules. For example, terraform-google-modules/event-function/google helps Bob create Cloud Functions triggered by Pub/Sub events.


Remember that the name should be globally unique; for example, you can use project_id as a prefix. Also, specify the Pub/Sub topic in event_trigger.resource.
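Here is a sketch of such a module call. It assumes a Pub/Sub topic that announces finished GA4 exports and Python function source in a local folder. The topic name, function name suffix, runtime, and paths are all hypothetical. The module version is deliberately left as a comment: pin it to a release you have tested.

```hcl
variable "project_id" {
  type = string
}

variable "region" {
  type = string
}

# Hypothetical topic that signals "new GA4 data is ready".
resource "google_pubsub_topic" "ga4_ready" {
  project = var.project_id
  name    = "ga4-export-ready"
}

module "process_ga4" {
  source = "terraform-google-modules/event-function/google"
  # Pin a tested release here with the version argument.

  project_id = var.project_id
  region     = var.region

  # The project_id prefix keeps the name unique across clients.
  name             = "${var.project_id}-process-ga4"
  description      = "Processes fresh GA4 export data"
  runtime          = "python311"
  entry_point      = "main"
  source_directory = "${path.module}/functions/process_ga4" # hypothetical path

  # The Pub/Sub topic goes into event_trigger.resource.
  event_trigger = {
    event_type = "google.pubsub.topic.publish"
    resource   = google_pubsub_topic.ga4_ready.name
  }
}
```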

It’s possible to use Terraform to manage GitHub repositories: create them, clone them, and copy code from one repository to another. But Bob still had to create fine-grained GitHub tokens for new repositories manually. Also, it looks like Terraform was never married: when something went wrong, it tried to destroy the resource and create a new one, a bad tactic for both marriages and repositories. So Bob decided to use Terraform only for GCP.

But Bob wanted the whole team to use Terraform, so he decided to move up to the Clouds, with GitHub Actions.

GitHub Actions

Now he could use one Git repository with Terraform for all clients. In the GitHub workflow .yaml file, he added an input with the project name.


Based on this input, the workflow creates or selects a Terraform workspace.


Next, it switches the Terraform configuration variables to the selected client.


The script runs the apply command with the -var-file option pointing to the client-specific variables file.
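For illustration, a client-specific variables file might look like the following. The path clients/client-a.tfvars and all values are hypothetical, and each variable must also be declared with a variable block in the configuration.

```hcl
# clients/client-a.tfvars (hypothetical path and values)
# Applied with: terraform apply -var-file="clients/client-a.tfvars"
project_id   = "client-a-analytics"
region       = "europe-west1"
modules_list = ["dataform"]
```

One such file per client lets a single repository and a single workflow serve every client.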

It also switches service accounts and secrets based on the selected project.


Bob also created a Cloud Storage bucket in his own GCP project and stored all clients’ Terraform states there.


For the state, Bob uses an additional service account key file that has access only to Bob’s project, not to the clients’ projects.
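Here is a sketch of that setup. The bucket name, key file name, and the client_credentials_file variable are hypothetical.

Backend blocks cannot use Terraform variables, so their values are either hard-coded or passed with terraform init -backend-config=KEY=VALUE. With workspaces, the gcs backend stores each workspace's state as prefix/workspace-name.tfstate, so one bucket can hold every client's state.

```hcl
terraform {
  backend "gcs" {
    # Bucket in Bob's own project, not in any client project (hypothetical name).
    bucket = "bob-terraform-states"
    # Each workspace (client) gets its own object: clients/<workspace>.tfstate
    prefix = "clients"
    # Key for a service account that can reach only Bob's state bucket.
    credentials = "bob-state-sa.json"
  }
}

variable "project_id" {
  type = string
}

# Hypothetical: path to the client-specific service account key.
variable "client_credentials_file" {
  type = string
}

# Client resources are managed with a different service account than the state.
provider "google" {
  project     = var.project_id
  credentials = file(var.client_credentials_file)
}
```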

So now, if for any reason Clicks capture a client project and make random changes, Bob can easily find or revert them by running terraform plan or terraform apply from the workflow.


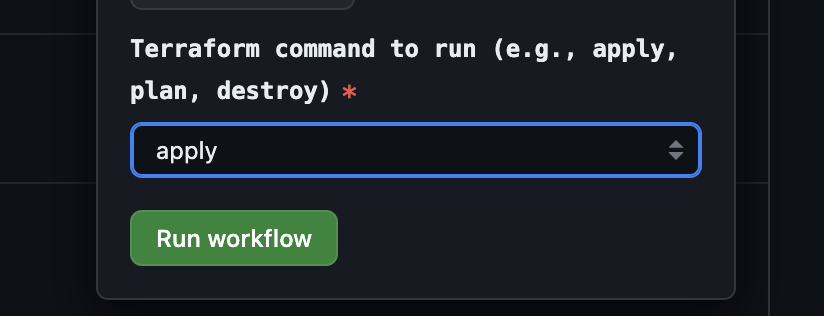

And select the right command in the GitHub UI:

One more benefit: the Clouds can keep secrets. You can define secrets in GitHub, pass them to Terraform as files or variables, and create the corresponding secrets in GCP with Secret Manager.
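Here is a minimal sketch of the variable route. It makes three assumptions. First, the workflow exposes a GitHub secret (GH_TOKEN is a hypothetical name) as the environment variable TF_VAR_github_token, which Terraform reads automatically for a variable named github_token. Second, the google provider is version 5.x or later, which is needed for the replication { auto {} } syntax. Third, the secret ID is hypothetical.

```hcl
variable "project_id" {
  type = string
}

# Filled from TF_VAR_github_token, e.g. in the workflow step:
#   env:
#     TF_VAR_github_token: ${{ secrets.GH_TOKEN }}   # hypothetical secret name
variable "github_token" {
  type      = string
  sensitive = true # redacts the value in plan/apply output
}

resource "google_secret_manager_secret" "github_token" {
  project   = var.project_id
  secret_id = "github-token" # hypothetical ID

  replication {
    auto {}
  }
}

resource "google_secret_manager_secret_version" "github_token" {
  secret      = google_secret_manager_secret.github_token.id
  secret_data = var.github_token
}
```

Marking the variable sensitive only hides it in CLI output; the value is still written to the state file in plain text. That is one more reason to keep state in a bucket with tightly restricted access.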


Happy end (not for Clicks)

It was an interesting journey to the Clouds. Bob is more or less happy. Of course, there are still a few Clicks and plenty of room for improvement, but:

- All infrastructure is under Terraform control. He can’t miss any steps, because they are all in the Terraform configuration files

- Different clients have their own configuration files, and it’s much easier to keep everything in an up-to-date state

- Every modification is in the same Git repository, making it simple to identify who made changes and why

- The code is a better version of the documentation, as all needed resources are well defined and Bob can easily compare expectations with reality

- Bob can deploy pipelines for new clients faster and more easily, and spend his precious time on something valuable, not on Clicks.

Many thanks for reading. If you have any technical questions, or if you decide to reduce your Carbon Clicks Footprint, please message me on LinkedIn.

P.S. My love to my daughter, who drew all these funny characters. She personally believes that Bob should look like this:

P.P.S. Thanks to Mårten for his inspiring presentation about storytelling.